
Kappa Value Explained | Statistics in Physiotherapy

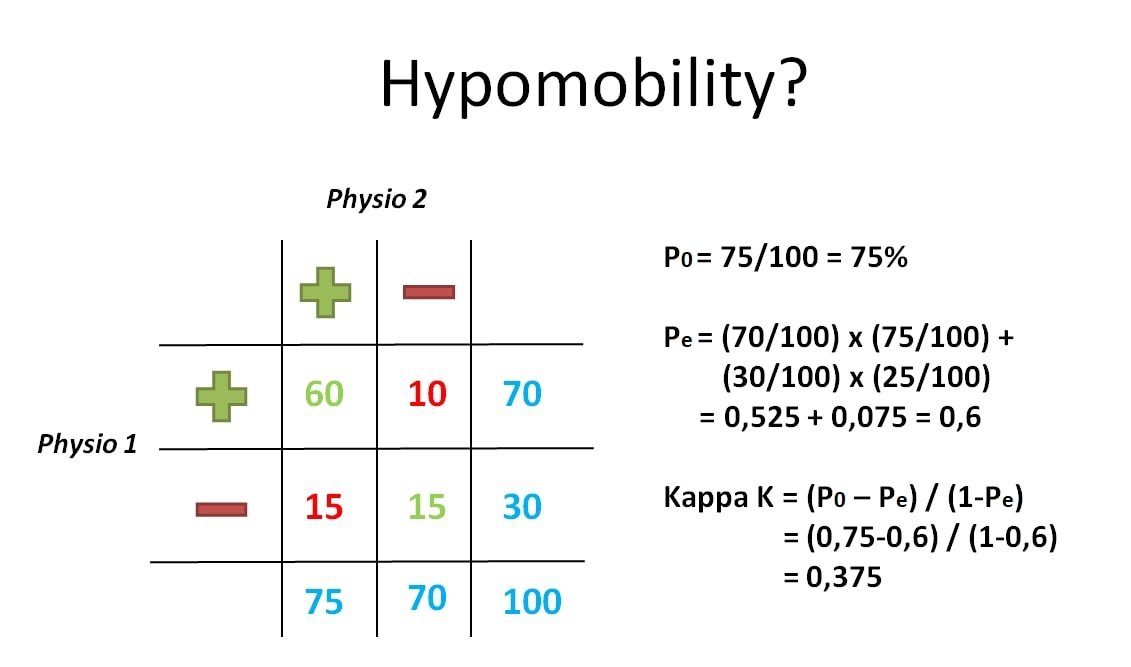

Before you read this post, you should have a good grasp of the topic of reliability. Check out our post on reliability here. Precision, or inter-rater reliability, is often reported as a Kappa statistic. The Kappa value gives you a quantitative measure of agreement in any situation in which two or more independent observers evaluate the same thing. For example, imagine that two physiotherapists assess the lumbar vertebra L5 for hypomobility in 100 patients.

Physio 1 and physio 2 agree that patients are hypomobile in 60 cases and not hypomobile in 15 cases, so they agree in 75% of cases. In the other 25 cases, they have different opinions. However, even if both of them evaluated the patients randomly, they would sometimes agree just by chance. The Kappa value takes this chance into account. It compares the agreement that is actually present, called ‘observed agreement’, with the agreement that would be expected by chance, called ‘expected agreement’.

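The expected agreement is calculated as follows: multiply the proportion of positive answers of physio 1 by the proportion of positive answers of physio 2. Physio 1 rated 70 of the 100 patients as hypomobile (the 60 shared cases plus 10 more) and physio 2 rated 75 as hypomobile (the 60 shared cases plus 15 more). So you have 70/100 and 75/100, and you multiply those two values with each other, which gives 0.525.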

Then you do the same for the negative answers of physio 1 (30) and the negative answers of physio 2 (25): each is divided by 100 and the two values are multiplied with each other, which gives 0.075. Adding the two products, you end up with 0.525 plus 0.075, so you have an expected agreement of 0.6.
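Written out as a single expression, the expected agreement from the example looks like this. The symbol p_e is our own shorthand here and is not used elsewhere in this post.

$$
p_e = \frac{70}{100} \cdot \frac{75}{100} + \frac{30}{100} \cdot \frac{25}{100} = 0.525 + 0.075 = 0.6
$$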

Now let’s finally calculate our Kappa value. Take the observed agreement P0, which in our case is 0.75, subtract the expected agreement of 0.6, and divide the result by 1 minus the expected agreement, so 1 minus 0.6. That is 0.15 divided by 0.4, which gives a Kappa value of 0.375.
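If you want to check the calculation yourself, here is a minimal Python sketch of the steps described in this post. The function name `cohen_kappa` and its parameter names are our own illustration, not part of any library. The two disagreement counts (10 and 15) are not stated directly in the example; they are derived from the totals of 70 and 75 positive answers minus the 60 cases in which both physios agreed.

```python
def cohen_kappa(both_pos, only_rater1_pos, only_rater2_pos, both_neg):
    """Return (observed agreement, expected agreement, kappa) for a 2x2 table.

    Hypothetical helper for illustration; the arguments are the four cell
    counts of the agreement table for two raters and a yes/no judgement.
    """
    n = both_pos + only_rater1_pos + only_rater2_pos + both_neg
    if n == 0:
        raise ValueError("the table must contain at least one rating")

    # Observed agreement: share of cases in which both raters say the same.
    p_observed = (both_pos + both_neg) / n

    # Totals of positive and negative answers per rater.
    pos1 = both_pos + only_rater1_pos
    pos2 = both_pos + only_rater2_pos
    neg1 = n - pos1
    neg2 = n - pos2

    # Expected agreement: chance of both saying "yes" plus both saying "no".
    p_expected = (pos1 * pos2 + neg1 * neg2) / (n * n)
    if p_expected == 1:
        raise ValueError("kappa is undefined when expected agreement is 1")

    kappa = (p_observed - p_expected) / (1 - p_expected)
    return p_observed, p_expected, kappa


# Example from this post: 60 both hypomobile, 15 both not hypomobile,
# 10 rated hypomobile only by physio 1, 15 only by physio 2.
p_o, p_e, kappa = cohen_kappa(60, 10, 15, 15)
print(round(p_o, 3), round(p_e, 3), round(kappa, 3))  # 0.75 0.6 0.375
```

The values are rounded before printing because floating-point arithmetic can leave tiny rounding errors in the last digits.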

So what does the Kappa value of 0.375 mean? According to the commonly used scale by Landis & Koch (1977), this would mean that the two physios have a fair agreement on which patients are hypomobile and which ones are not. Note that a Kappa value of 0 or less means the agreement is no better than what you would expect by chance, while a value of 1 means ‘perfect agreement’.
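To look up other Kappa values on the same scale, a small Python sketch like the following can help. The function name `landis_koch_label` is our own illustration. The cut-offs are the bands published by Landis & Koch (1977): below 0 poor, 0 to 0.20 slight, 0.21 to 0.40 fair, 0.41 to 0.60 moderate, 0.61 to 0.80 substantial, and 0.81 to 1.00 almost perfect.

```python
def landis_koch_label(kappa):
    """Map a kappa value to its Landis & Koch (1977) agreement label.

    Hypothetical helper for illustration only.
    """
    if not -1 <= kappa <= 1:
        raise ValueError("kappa must lie between -1 and 1")
    if kappa < 0:
        return "poor"
    if kappa <= 0.20:
        return "slight"
    if kappa <= 0.40:
        return "fair"
    if kappa <= 0.60:
        return "moderate"
    if kappa <= 0.80:
        return "substantial"
    return "almost perfect"


print(landis_koch_label(0.375))  # fair
```

Keep in mind that these labels are a convention rather than a hard rule, so always report the Kappa value itself alongside the label.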
